When Dayo Lawal noticed an unexpected debit on his bank account, he turned to the bank’s chatbot. After all, it is positioned as part of customer service. But the bot offered nothing useful.

“It never helped,” he said.

That dead end pushed him to call customer service. He stayed on hold for 35 minutes, only to be told that the debit reversed an “accidental double credit”, one that, he said, never appeared on his statement. With no resolution from either the bot or the agent, Dayo eventually gave up.

“I had to forfeit the money because I got tired,” he recalled.


He is not alone. Another commercial bank customer said they would rather “spend a whole day at the bank than waste my time with the bot”.

For many, these digital assistants have become less about solving problems and more about rerouting them.

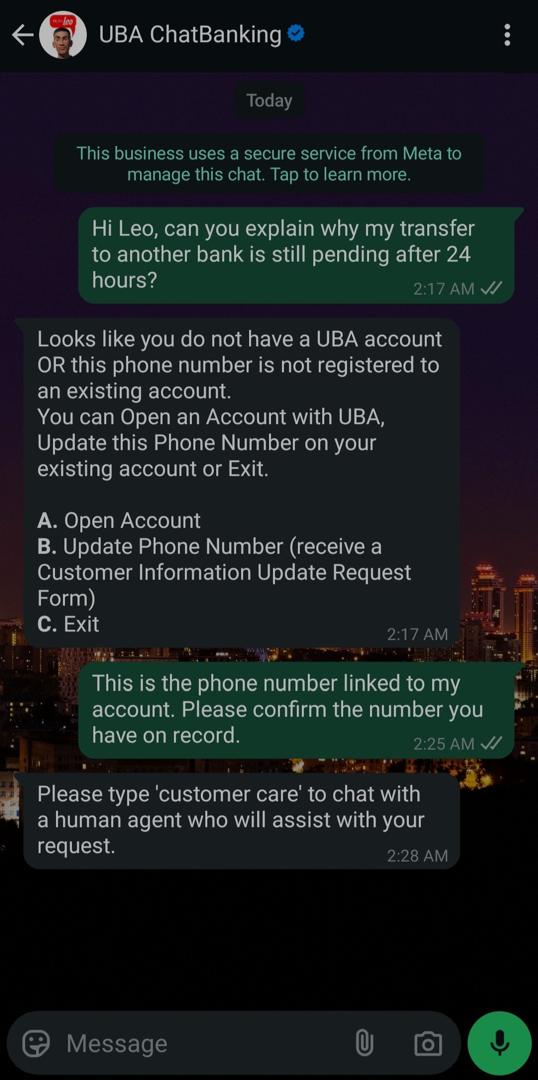

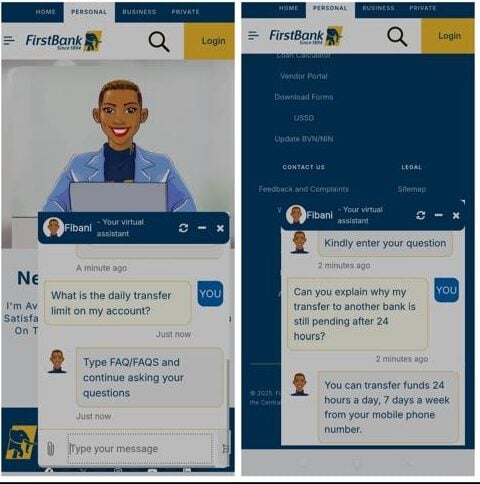

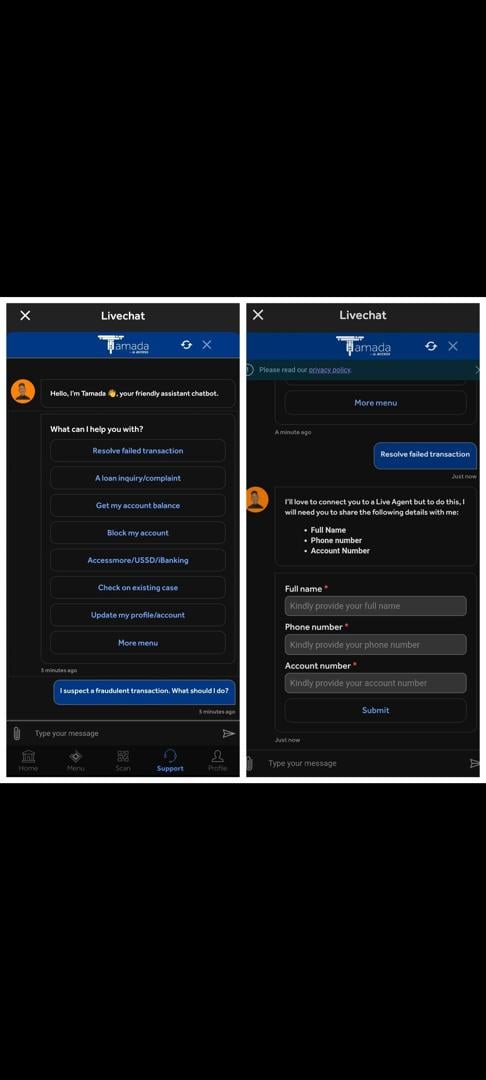

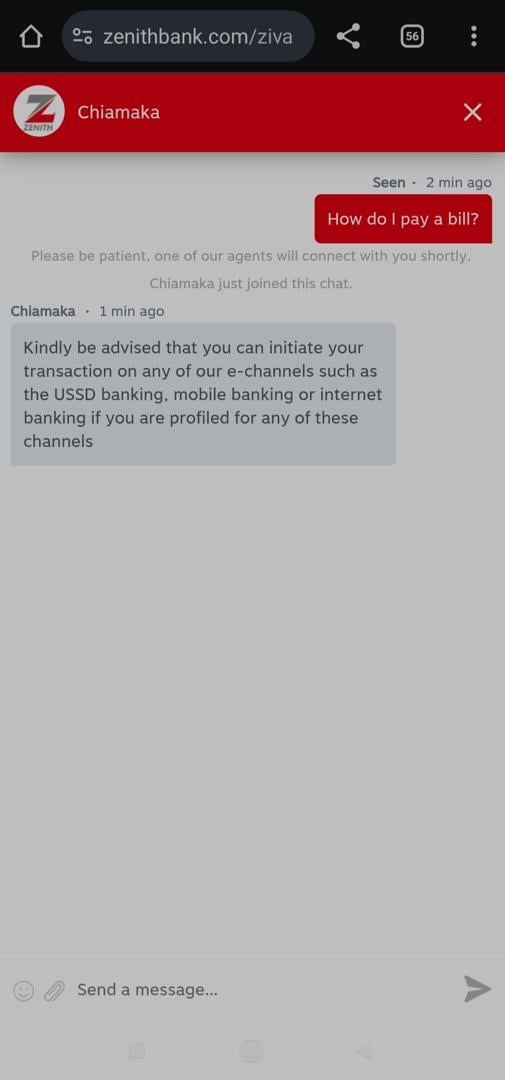

To assess these concerns, TheCable tested the virtual assistants of four leading banks: FirstBank (Fibani), UBA (Leo), Zenith Bank (Ziva), and Access Bank (Tamada). The goal was to determine whether these chatbots could handle inquiries beyond their pre-programmed scripts and to evaluate how well they responded.


The results were consistent across all four banks. The bots cycled users through pre-made menus and repeated redirects rather than giving precise answers.

THE CHATBOT EVASION PLAYBOOK

| Bank (Bot) | Tactic | User Query | Bot Response | Result |
|---|---|---|---|---|
| UBA (Leo) | Menu Redirect | “What’s my savings account interest rate?” | “I am not sure of your request. Please choose from this menu…” | Question ignored. User forced into irrelevant options. |
| FirstBank (Fibani) | FAQ Loop | “Why is my interbank transfer still pending?” | (After FAQ prompt) “You can transfer funds 24/7 from your mobile app.” | Core issue ignored. A generic, unhelpful response was provided. |
| Access Bank (Tamada) | Early Handoff | “How do I pay a new bill provider?” | “Please hold while I connect you to an agent.” [5-minute wait] | The bot acts as an inefficient switchboard, adding significant delay. |
| Zenith Bank (Ziva) | Early Handoff | “How do I pay a bill?” | “Please be patient; an agent will connect with you shortly.” | Swift surrender. No attempt to solve the problem. |

THE THREE TACTICS OF EVASION

The test, carried out using real customer accounts, showed that the bots do not fail randomly; they fail in predictable, scripted ways. Their design makes open-ended inquiries effectively impossible. Each interaction followed a recognisable evasion tactic that prevented a direct answer, and these tactics fall into three failure modes.


- The menu redirect (UBA’s Leo).

When asked anything outside its script, Leo pushed users back into rigid menus, ignoring the original query. For instance, when asked, “What’s my savings account interest rate?” Leo replied, “I am not sure of your request. Please choose from this menu…” The user was forced into irrelevant options or had to start over.

- The FAQ loop (FirstBank’s Fibani).

Fibani trapped users in a never-ending series of FAQ prompts, responding to specific problems with generic, unhelpful platitudes. A user asking, “Why is my interbank transfer still pending after 24 hours?” was prompted to type “FAQ”. Upon doing so, the bot merely stated, “You can transfer funds 24 hours a day, 7 days a week from your mobile phone number”, completely ignoring the core issue.


- The early handoff (Access Bank’s Tamada & Zenith Bank’s Ziva).

A chatbot should be able to provide instant answers to frequently asked questions and refer customers to a human only when required. However, for bots such as Access Bank’s Tamada and Zenith Bank’s Ziva, the handoff seemed to be a first rather than a last resort. Ziva made no effort to offer guidance when posed a simple, everyday question such as “How do I pay a bill?” Instead, its immediate and only response was, “Please be patient; one of our agents will connect with you shortly.” This pattern points to a chatbot system lacking even a rudimentary knowledge base for essential banking operations.
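
The principle of answering what it can and escalating only when needed can be written as a simple routing rule. The sketch below is illustrative only: the intents, thresholds, answer text and the `route` function are hypothetical and are not taken from any of the four banks’ systems. It assumes an upstream language engine has already produced an intent label and a confidence score between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical knowledge base mapping an intent label to a canned answer.
KNOWLEDGE_BASE = {
    "pay_bill": "Open the app, choose Payments, then Bills, and select the biller.",
    "interest_rate": "Current savings rates are listed under Accounts in the app.",
}

ANSWER_THRESHOLD = 0.75   # hypothetical: confident enough to answer directly
CLARIFY_THRESHOLD = 0.40  # hypothetical: plausible match, so confirm first


@dataclass
class Reply:
    action: str  # "answer", "clarify" or "handoff"
    text: str


def route(intent: str, confidence: float) -> Reply:
    """Answer from the knowledge base when possible; use a human agent as a last resort."""
    if intent in KNOWLEDGE_BASE:
        if confidence >= ANSWER_THRESHOLD:
            return Reply("answer", KNOWLEDGE_BASE[intent])
        if confidence >= CLARIFY_THRESHOLD:
            # Confirm the user's actual question instead of resetting to a generic menu.
            topic = intent.replace("_", " ")
            return Reply("clarify", f"Just to confirm, is your question about: {topic}?")
    # Not covered by the knowledge base, or too uncertain to guess: escalate.
    return Reply("handoff", "Connecting you to an agent.")


for intent, confidence in [("pay_bill", 0.9), ("pay_bill", 0.5), ("card_dispute", 0.95)]:
    print(intent, confidence, "->", route(intent, confidence).action)
```

Under a rule like this, an everyday question such as “How do I pay a bill?” would be answered directly; the behaviour observed from Tamada and Ziva resembles a bot that always takes the final handoff branch.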


WHY CHATBOTS FAIL


According to experts, the consistent failure stems from strategic underinvestment and from banks prioritising public relations over functionality.

Tunji Onalade, a banking systems developer, said the core issue is a lack of commitment to building a quality product.


“Most banks don’t commit resources to properly training the models. They rely on limited or outdated datasets, so the bots can’t really understand what customers mean,” Onalade said.

“The technical deficiency is often compounded by unstable connections. When the Application Programming Interface (API) connection is weak, the bot just loops the same message or stalls with a ‘please wait’,” the developer said. In such cases, the system cannot fetch real-time data, which leaves customers stranded in repetitive prompts.
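
Onalade’s point about weak API connections can be illustrated with a bounded-retry pattern: rather than looping “please wait” indefinitely, the bot tries the backend a fixed number of times and then tells the customer plainly that the data is unavailable. In this minimal sketch, `FlakyBackend`, `fetch_balance` and `answer_balance` are hypothetical stand-ins for a bank’s core-banking integration; real timeouts, back-off values and error types would differ.

```python
import time


class BackendUnavailable(Exception):
    """Raised by the hypothetical backend when a call fails or times out."""


class FlakyBackend:
    """Hypothetical core-banking API that fails its first N calls, then succeeds."""

    def __init__(self, failures_before_success: int):
        self.remaining_failures = failures_before_success

    def fetch_balance(self, account_id: str) -> str:
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise BackendUnavailable("connection timed out")
        return "12,500.00"  # placeholder value for the demo


def answer_balance(backend, account_id: str, max_attempts: int = 3,
                   backoff_seconds: float = 0.0) -> str:
    """Try the backend a bounded number of times, then fail with a clear message."""
    for attempt in range(1, max_attempts + 1):
        try:
            return f"Your balance is {backend.fetch_balance(account_id)}."
        except BackendUnavailable:
            if attempt < max_attempts:
                # Exponential back-off between attempts (zero by default for the demo).
                time.sleep(backoff_seconds * 2 ** (attempt - 1))
    # After the last failed attempt, say so instead of repeating "please wait".
    return ("We can't reach our account systems right now. "
            "Reply AGENT to speak to a person or try again later.")


print(answer_balance(FlakyBackend(2), "0123456789"))  # succeeds on the third attempt
print(answer_balance(FlakyBackend(5), "0123456789"))  # gives up after three attempts
```

The design choice is that a failure becomes a visible, final state with a next step for the customer, rather than an open-ended wait.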

Onalade explained that most Nigerian banks rely on a hybrid model. They design custom-built interfaces for branding and basic workflows, but the core intelligence comes from third-party AI platforms such as Microsoft Azure Bot Framework, Google Dialogflow, or IBM Watson. This means that banks control the “look and feel” while outsourcing the heavy lifting of natural language processing and backend orchestration.
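
The hybrid split described here, a bank-owned interface sitting on top of a third-party language engine, amounts to a dependency boundary. The sketch below models it with a Python `Protocol`: the bank’s layer depends only on a `parse` method, so the vendor behind it can be swapped without touching branding or menus. `NluProvider`, `KeywordStub` and `BankAssistant` are hypothetical names; the real Azure Bot Framework, Dialogflow and Watson SDKs expose their own, different APIs, which are not reproduced here.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Parse:
    intent: str
    confidence: float


class NluProvider(Protocol):
    """What the bank's front end needs from any third-party language engine."""

    def parse(self, text: str) -> Parse: ...


class KeywordStub:
    """Hypothetical stand-in for an outsourced NLU service."""

    def parse(self, text: str) -> Parse:
        if "bill" in text.lower():
            return Parse("pay_bill", 0.9)
        return Parse("unknown", 0.0)


class BankAssistant:
    """Bank-owned layer: persona name and fallback menu (the "look and feel")."""

    def __init__(self, name: str, nlu: NluProvider):
        self.name = name
        self.nlu = nlu  # language understanding is delegated to the plugged-in vendor

    def reply(self, text: str) -> str:
        result = self.nlu.parse(text)
        if result.intent == "unknown":
            # When the outsourced engine cannot classify the message, the only
            # fallback this bank-owned layer offers is its fixed menu.
            return f"{self.name}: I am not sure of your request. Please choose from this menu."
        return f"{self.name}: Let's help you with {result.intent.replace('_', ' ')}."


bot = BankAssistant("Demo", KeywordStub())
print(bot.reply("How do I pay a bill?"))
print(bot.reply("What's my savings account interest rate?"))
```

In this arrangement, the quality of answers depends almost entirely on the engine passed in as `nlu`; a weak or poorly trained engine pushes most messages into the menu fallback.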

Adanne Anene, a product leader who has worked with different Nigerian banks, argued that the failure reflects a deeper budget problem.

“Banks in Nigeria usually underinvest in chatbot intelligence. It is often seen as a cost centre, not a revenue generator, which limits long-term commitment,” she said.

According to her, most of the budget goes into promotional campaigns rather than sustained upgrades or proper training of the AI.

The marketing-first strategy is clear in the banks’ promotions.

Shortly after celebrating 3 million users, UBA announced that Leo is the first chatbot in Africa to process cross-border payments. FirstBank calls Fibani a “24/7 virtual assistant”, and Access Bank brands Tamada as a “personal banker in your pocket”.

For customers, the result is a broken promise.

INNOVATION GAP

The failure to build competent chatbots represents a key misstep. The global banking AI market is anticipated to grow from $5.13 billion in 2021 to $64.03 billion by 2030. Yet, Nigerian banks are failing to harness this potential.
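
For scale, those two market figures imply a compound annual growth rate (CAGR) of roughly 32% over the nine years from 2021 to 2030. The check below uses only the numbers quoted above and the standard CAGR formula; it is a back-of-the-envelope calculation, not a figure from the underlying forecast.

```latex
\text{CAGR} = \left(\frac{V_{2030}}{V_{2021}}\right)^{1/(2030-2021)} - 1
            = \left(\frac{64.03}{5.13}\right)^{1/9} - 1
            \approx 12.48^{1/9} - 1
            \approx 0.324
```

In other words, the market is projected to grow by about a third each year.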

For instance, despite Access Bank’s partnership to reduce voice response times, our tests found Tamada remained among the slowest and most unreliable for non-menu queries.

The innovation gap is enabled by a regulatory vacuum. While the Central Bank of Nigeria (CBN) has general consumer protection rules, it has not created specific regulations for AI chatbots. This inaction allows banks to implement poor systems that routinely fail customers.

By contrast, foreign authorities are taking action against deceptive AI practices. The US Federal Trade Commission’s (FTC) new Operation AI Comply program seeks to penalise companies that use AI to mislead or harm consumers. The FTC emphasised that “there is no AI exemption from the laws on the books”. Likewise, the Consumer Financial Protection Bureau (CFPB) has warned that companies using poorly designed chatbots risk breaking the law.

Bello Hassan, the Nigeria Deposit Insurance Corporation (NDIC) chief, demanded greater transparency. “As banks deploy AI, they must avoid bias and protect customer data privacy. Transparency in AI decision-making is essential,” he said.

TOWARD A SOLUTION

Despite efforts to appear open, these bots are designed to prioritise safety over conversational intelligence, according to Festus Kisu, a developer.

This choice results in systems that cannot move beyond fixed scripts, understand what users really mean, or provide the diagnostic depth needed to resolve complaints.

Kisu said that improving these systems depends on technology that can understand user intent rather than merely match keywords. He argued that this requires investment in natural language processing (NLP). The developer also identified generative AI as a “promising next step”, given its ability to handle open-ended questions and learn from interactions.
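
The difference between matching keywords and recognising intent can be seen in miniature below. A keyword rule fires only on exact words, so a message saying the money “has not arrived” slips past a rule keyed on “pending”. The second function is a crude stand-in for intent detection: it compares the message with several example phrasings per intent using bag-of-words cosine similarity. Real NLP systems rely on trained language models rather than word overlap; the intents, example phrases and threshold here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical keyword rules of the kind a scripted bot might use.
KEYWORD_RULES = {"pending": "transfer_status", "bill": "pay_bill"}

# Hypothetical example phrasings per intent (a real system would learn from many).
INTENT_EXAMPLES = {
    "transfer_status": [
        "why is my transfer pending",
        "my transfer has not arrived",
        "where is the money i sent",
    ],
    "pay_bill": ["how do i pay a bill", "pay my electricity bill"],
}


def tokens(text: str) -> Counter:
    """Lower-case word counts (a simple bag of words)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def keyword_intent(text: str) -> Optional[str]:
    """Return an intent only if one of the exact trigger words appears."""
    words = tokens(text)
    for keyword, intent in KEYWORD_RULES.items():
        if keyword in words:
            return intent
    return None


def similarity_intent(text: str, threshold: float = 0.3) -> Optional[str]:
    """Return the intent whose example phrasing is most similar, if similar enough."""
    query = tokens(text)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = cosine(query, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


message = "I sent money yesterday and it has not arrived"
print(keyword_intent(message), similarity_intent(message))
```

Even this toy version routes the complaint to the transfer-status intent where the keyword rule finds nothing; a production system would also need to discount common words and handle the Nigerian phrasing Kisu says current training data lacks.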

However, Kisu warned that adoption might face hurdles like high costs, data privacy concerns, and the need for extensive training data that is specific to Nigeria.

Ultimately, he believes the future of banking AI depends on clearer regulatory guidance and investment in solutions built with Nigerian realities in mind.

Note: The names of the technical experts have been changed for confidentiality.

This report was produced with support from the Centre for Journalism Innovation and Development (CJID).